Three Ways to Build AI Group Chat: Why We Chose the Hard Path

The Question That Started Everything

"Why can't each character have their own message bubble, like other apps?"

We get this question a lot. And honestly, it's a great question. Most chat apps show messages as separate bubbles - one per person. So why does our group chat combine multiple character responses into a single message?

The answer isn't laziness or oversight. It's a deliberate engineering choice born from months of experimentation with three fundamentally different approaches to AI group conversations.

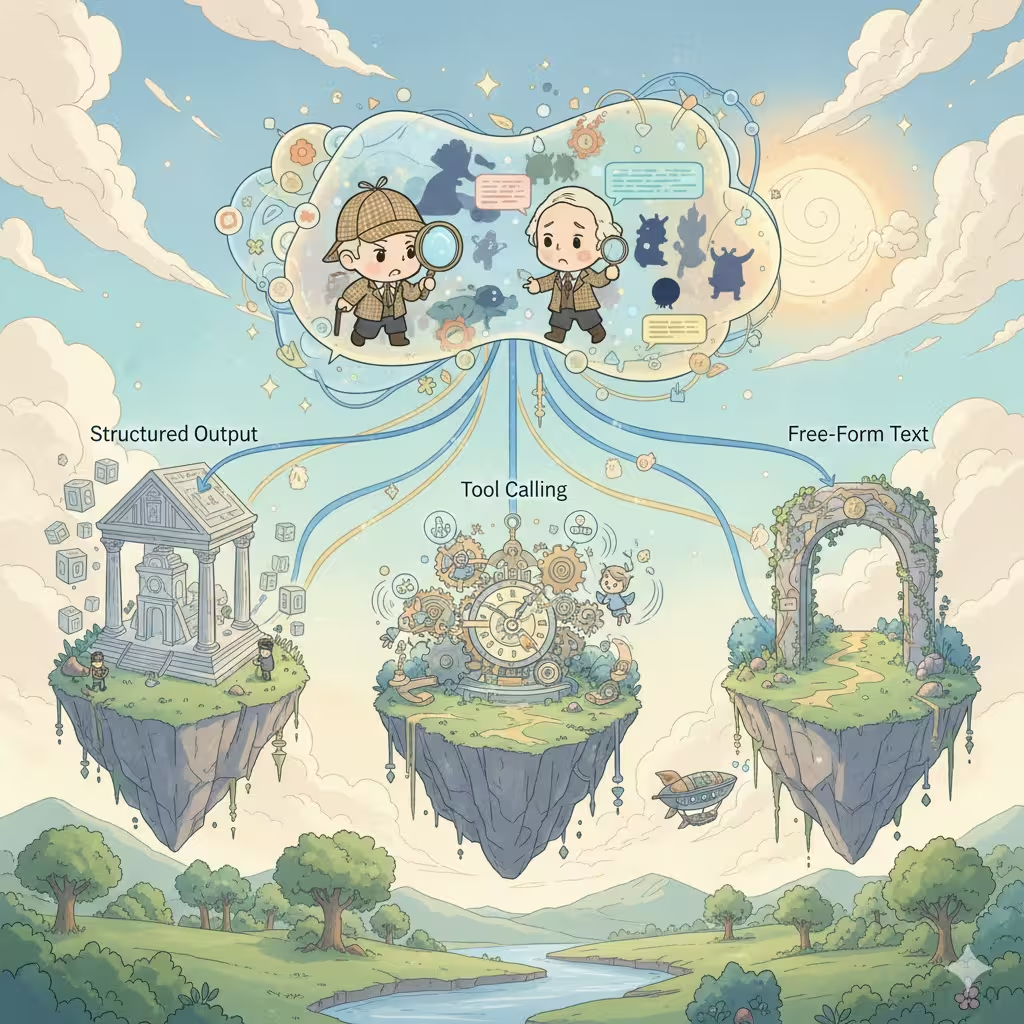

The Three Architectures

When building multi-character AI conversations, every platform faces the same decision. There are three main ways to do it, each with profound implications for cost, quality, and user experience.

1. Structured Output (JSON Arrays)

The most common approach in the industry. You ask the AI to return a JSON array where each element represents a character's response:

[
  {
    "speaker": "Sherlock",
    "emotion": "intrigued",
    "content": "Fascinating. The mud pattern suggests..."
  },
  {
    "speaker": "Watson",
    "emotion": "confused",
    "content": "Holmes, what do you mean?"
  }
]

The Appeal:

- Single API call, single credit charge

- Easy to parse and render as separate message bubbles

- Can include rich metadata (emotions, actions, scene descriptions)

- Perfect for generating user reply suggestions

The Reality:

- In our testing, only expensive premium models (Claude, GPT-4) produced structured output reliably; most affordable models struggled to keep the JSON format consistent

- Format errors break the entire response

- JSON instructions consume tokens, reducing creative space

- Models feel "constrained" - creativity often suffers

- Content restrictions become stricter: in our experience, structured output mode often triggers more aggressive content filtering, making mature or edgy roleplay scenarios more likely to fail

- Context pollution: your conversation history fills with JSON structures

- Error handling complexity: what happens when parsing fails mid-stream?

Most third-party character platforms use this approach. It works, but the constraints are real.
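To make "format errors break the entire response" concrete, here is a minimal Python sketch of the validation a structured-output client typically ends up writing. The names `parse_group_reply`, `CharacterTurn`, and `StructuredOutputError`, and the rule that an unknown speaker rejects the reply, are illustrative assumptions, not any platform's actual code. Note that a single bad element, or a reply cut off before its closing bracket, discards every bubble.

```python
import json
from dataclasses import dataclass


@dataclass
class CharacterTurn:
    speaker: str
    content: str
    emotion: str = "neutral"


class StructuredOutputError(ValueError):
    """Raised when the model's reply is not the JSON array we asked for."""


def parse_group_reply(raw: str, known_speakers: set[str]) -> list[CharacterTurn]:
    """Parse a JSON-array reply into one CharacterTurn per message bubble.

    Any format problem rejects the whole reply: there is no partial result.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise StructuredOutputError(f"invalid JSON: {exc}") from exc
    if not isinstance(data, list) or not data:
        raise StructuredOutputError("expected a non-empty JSON array")

    turns = []
    for i, item in enumerate(data):
        if not isinstance(item, dict):
            raise StructuredOutputError(f"element {i} is not an object")
        speaker = item.get("speaker")
        content = item.get("content")
        if not isinstance(speaker, str) or speaker not in known_speakers:
            raise StructuredOutputError(f"element {i}: unknown speaker {speaker!r}")
        if not isinstance(content, str) or not content.strip():
            raise StructuredOutputError(f"element {i}: missing content")
        turns.append(CharacterTurn(speaker, content, item.get("emotion", "neutral")))
    return turns
```

In a streaming UI this is the awkward part: nothing can be validated as a whole until the final `]` arrives.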

2. Tool Calling (Agent Mode)

The most "intelligent" approach. The AI decides which character should speak next, calls a tool to indicate this, then generates that character's response. Repeat until the scene feels complete.

AI thinks: "Watson should react to this revelation"
→ calls tool: next_speaker("Watson")
→ generates Watson's response
→ AI thinks: "Now Sherlock would interject"
→ calls tool: next_speaker("Sherlock")
→ generates Sherlock's response
...

The Appeal:

- Most natural conversation flow

- AI has full creative control over scene pacing

- Each character's response gets its own dedicated generation

- Naturally produces separate messages per character

The Reality:

- Multiple API calls = multiple credit charges

- Latency compounds: N characters = N round trips

- In our testing, only high-end models (Claude, GPT-4) handled tool calling reliably; cheaper models often failed or hallucinated tool calls

- Complex state management across calls

- Risk of infinite loops or unexpected termination

- Debugging nightmares: issues are hard to reproduce

This is the "dream architecture" that looks beautiful on paper but creates operational headaches at scale.
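The loop in the trace above can be sketched in Python as follows. This is a minimal sketch that assumes two hypothetical model callbacks. `pick` stands in for the `next_speaker` tool call, and `generate` produces the character's reply. Each callback is counted as a separate API call, although a real integration might combine them. The `max_turns` cap is one common guard against the infinite-loop risk listed above. All names and the default cap are assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical model interfaces (assumptions, not a real SDK):
# pick(transcript) returns the next speaker's name, or None to end the scene.
PickSpeaker = Callable[[list[str]], Optional[str]]
# generate(speaker, transcript) returns that character's next line.
GenerateLine = Callable[[str, list[str]], str]


@dataclass
class SceneResult:
    transcript: list[str] = field(default_factory=list)
    api_calls: int = 0
    hit_turn_limit: bool = False


def run_agent_scene(pick: PickSpeaker, generate: GenerateLine,
                    cast: set[str], max_turns: int = 6) -> SceneResult:
    result = SceneResult()
    for _ in range(max_turns):
        speaker = pick(result.transcript)
        result.api_calls += 1  # one round trip to decide who speaks
        if speaker is None:
            return result  # the model chose to end the scene
        if speaker not in cast:
            # Hallucinated tool argument: skip it, but it still uses up a turn.
            continue
        line = generate(speaker, result.transcript)
        result.api_calls += 1  # another round trip for the line itself
        result.transcript.append(f"{speaker}: {line}")
    result.hit_turn_limit = True  # cut off: the model never ended the scene
    return result
```

Note how `api_calls` depends on what the model decides at runtime. Both the cost and the latency of a scene are only known after it finishes.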

3. Free-Form Text Output (Our Current Choice)

The simplest approach. Ask the AI to write the scene naturally, letting it decide how to present multiple characters in flowing prose:

Sherlock leaned forward, eyes sharp. "Fascinating. The mud pattern suggests our suspect came from the east side."

Watson frowned. "Holmes, what do you mean? It's just mud."

"Just mud?" Sherlock smiled. "My dear Watson, there's no such thing as 'just' anything."

The Appeal:

- Works with every AI model, no special features required

- Maximum creative freedom - AI writes naturally

- Clean context: conversation history reads like a novel

- Excellent streaming experience

- Single call, predictable costs

- Simplest to implement and maintain

The Reality:

- All characters in one message block

- Can't easily regenerate a single character's response

- UI flexibility is limited

- Users expecting chat-style bubbles may feel confused
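For contrast, a free-form request can be a single plain-text prompt with no JSON schema and no tool definitions. This is why it works with any model. Here is a minimal sketch: `build_freeform_prompt` and its wording are hypothetical and illustrative, not a tuned production prompt.

```python
def build_freeform_prompt(characters: dict[str, str], scene: str) -> str:
    """Assemble one system prompt for a free-form group scene.

    `characters` maps each name to a one-line persona. The wording below is
    an illustrative assumption, not a production prompt.
    """
    cast = "\n".join(f"- {name}: {persona}" for name, persona in characters.items())
    return (
        "You are narrating a group roleplay scene.\n"
        f"Characters:\n{cast}\n"
        f"Scene: {scene}\n"
        "Write the next beat as natural prose. Include only the characters "
        "who would plausibly react, and make clear in the narration who "
        "speaks each line of dialogue."
    )
```

The model's reply can be streamed straight to the screen as it arrives, because nothing has to be parsed first.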

We Learned This the Hard Way

Here's something we haven't shared publicly before: our first version of group chat used tool calling.

We believed in the "dream architecture." AI deciding who speaks next, each character getting dedicated generation, beautiful separate message bubbles. It was elegant. It was intelligent. It was also a disaster in production.

Users experienced unpredictable costs - sometimes 3x what they expected for the same conversation. Response times varied wildly depending on how many characters the AI decided to involve. Cheaper models would hallucinate tool calls or get stuck in loops. Our error logs filled with edge cases we never anticipated.

After months of patches and workarounds, we made the difficult decision to rebuild from scratch with free-form text output. It felt like a step backward. But sometimes the "less intelligent" solution is the smarter choice.

Why We Made This Choice

After testing all three approaches extensively - and shipping one to production - we chose free-form text for group chat. Here's why:

Stability Over Features - Structured output can fail unpredictably. When your group chat breaks mid-conversation, users don't care about separate bubbles anymore: they just want it to work. Free-form text has no format to violate, so it never fails on formatting.

Quality Over Quantity - Constrained formats subtly reduce AI creativity. When we compared outputs, free-form consistently produced more vivid, natural-feeling character interactions. The AI could focus on storytelling instead of JSON syntax.

Cost Predictability - Agent mode bills every API call, and every character turn takes at least one call. As a result, a five-character scene could cost 5-10x more than users expect from a single reply. Users deserve predictable pricing.
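A back-of-the-envelope call count shows where such a multiplier comes from. This sketch rests on illustrative assumptions, not measured billing data. Each API call costs one credit. Agent mode spends `calls_per_turn` calls per speaking turn: 1 if the tool call and the reply share a round trip, 2 if they do not. Agent mode also makes one final call in which the model decides the scene is over.

```python
def calls_per_scene(architecture: str, speaking_turns: int, calls_per_turn: int = 1) -> int:
    """Estimate billable API calls for one group-chat scene (illustrative model)."""
    if architecture in ("structured", "freeform"):
        return 1  # the whole scene comes back from a single call
    if architecture == "agent":
        # one or more calls per speaking turn, plus a final "scene is over" call
        return speaking_turns * calls_per_turn + 1
    raise ValueError(f"unknown architecture: {architecture!r}")


for arch in ("structured", "freeform", "agent"):
    print(arch, calls_per_scene(arch, speaking_turns=5))
# structured 1
# freeform 1
# agent 6
print("agent, 2 calls per turn", calls_per_scene("agent", speaking_turns=5, calls_per_turn=2))
# agent, 2 calls per turn 11
```

With five speaking turns, agent mode needs 6 to 11 calls, compared with 1 for either single-call design. That is the same order of magnitude as the 5-10x figure above.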

Universal Compatibility - We support multiple AI models. Not all support structured output or tool calling equally well. Free-form text works everywhere, giving users more model choices.

The Trade-Off We Accept

Yes, we sacrifice the "one bubble per character" experience. But we gain:

- Rock-solid reliability

- Better creative quality

- Predictable costs

- Broader model support

- Cleaner conversation history

For group roleplay, where immersion matters most, we believe this trade-off is worth it.

What's Coming: Story Mode

Here's something exciting: we're building a new Story Mode that uses structured output.

Why the different approach? Story Mode has different priorities:

- Precise scene control matters more than freeform creativity

- Rich metadata (camera angles, music cues, chapter breaks) adds value

- The format is more predictable (clear chapter/scene structure)

- Users expect a more "produced" experience

Different use cases deserve different architectures. We're not religious about any single approach - we pick what serves users best.
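Part of why structured output suits Story Mode is that metadata like camera angles and chapter numbers comes from small, fixed vocabularies. Fields like these are easy to validate. The sketch below is hypothetical. The field names (`chapter`, `scene`, `camera`, `music_cue`, `narration`) and the allowed camera angles are assumptions for illustration, not the actual Story Mode format.

```python
import json
from dataclasses import dataclass
from typing import Optional

# Hypothetical vocabulary; the real Story Mode schema is not described here.
CAMERA_ANGLES = {"wide", "medium", "close_up", "over_the_shoulder"}


@dataclass(frozen=True)
class StoryBeat:
    chapter: int
    scene: int
    camera: str
    narration: str
    music_cue: Optional[str] = None

    @classmethod
    def from_json(cls, raw: str) -> "StoryBeat":
        d = json.loads(raw)
        if not isinstance(d, dict):
            raise ValueError("expected a JSON object")
        for key in ("chapter", "scene"):
            if not isinstance(d.get(key), int) or d[key] < 1:
                raise ValueError(f"{key} must be a positive integer")
        camera = d.get("camera")
        if not isinstance(camera, str) or camera not in CAMERA_ANGLES:
            raise ValueError(f"unknown camera angle: {camera!r}")
        narration = d.get("narration")
        if not isinstance(narration, str) or not narration.strip():
            raise ValueError("narration must be non-empty text")
        return cls(d["chapter"], d["scene"], camera, narration, d.get("music_cue"))


def starts_new_chapter(prev: Optional[StoryBeat], cur: StoryBeat) -> bool:
    """Chapter breaks are explicit in the data, so the UI can render them directly."""
    return prev is None or cur.chapter != prev.chapter
```

Unlike free-form roleplay, a rejected beat here can be regenerated on its own without losing the rest of the chapter.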

The Honest Truth

There's no perfect solution to multi-character AI conversations. Every approach trades something valuable for something else.

Other platforms that show separate bubbles? They're likely using structured output and accepting its limitations. Platforms with more "intelligent" scene control? Probably tool calling with higher costs and latency.

We chose the path that prioritizes what our users value most: reliable, creative, cost-effective group roleplay.

The separate bubble experience is nice. But not at the cost of everything else.

What We're Exploring

We're experimenting with hybrid approaches:

- Post-processing parsing: Using lightweight models to split free-form text into character segments after generation

- Optional structured mode: Letting power users choose structured output when they need precise control

- Smart scene detection: Automatically identifying natural break points for better UI presentation
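The post-processing idea above uses a lightweight model to split the text. The minimal Python sketch below swaps in a simple rule-based heuristic instead, so the data flow is visible. It assumes one paragraph per beat and attributes each paragraph to the first cast member named in its narration, ignoring text inside double quotes. Paragraphs with no named character go to a hypothetical "Narrator" segment. The heuristic will mis-attribute paragraphs where one character acts before another speaks, which is exactly why a model-based splitter is worth exploring.

```python
import re

QUOTE = re.compile(r'"[^"]*"')  # a double-quoted dialogue span


def split_by_speaker(text: str, cast: list[str]) -> list[tuple[str, str]]:
    """Heuristically split free-form scene text into (speaker, text) segments."""
    if not cast:
        raise ValueError("cast must not be empty")
    names = re.compile(r"\b(" + "|".join(map(re.escape, cast)) + r")\b")
    segments: list[tuple[str, str]] = []
    for para in (p.strip() for p in re.split(r"\n\s*\n", text)):
        if not para:
            continue
        narration = QUOTE.sub(" ", para)  # ignore names mentioned inside dialogue
        match = names.search(narration)
        speaker = match.group(1) if match else "Narrator"
        if segments and segments[-1][0] == speaker:
            # Merge consecutive paragraphs by the same speaker into one bubble.
            segments[-1] = (speaker, segments[-1][1] + "\n\n" + para)
        else:
            segments.append((speaker, para))
    return segments
```

Because the split happens after generation, the conversation history can stay as clean prose. Only the presentation layer sees the segments.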

The goal isn't to find the "right" answer. It's to keep improving the experience while maintaining what works.

Have thoughts on how group chat should work? We'd love to hear from you.

Ready to Experience Dynamic AI Conversations?

Join thousands of users already exploring endless personalities and engaging interactions on Reverie.